Uses for a few short-term VPSes

I'm cleaning house and have a few (7 +/- 1) VPSes that I'm not going to renew. They have at least 5 months remaining on the term and in one case over a year. They're generally lower-end, 0.5-4GB RAM, various storage/locations.

I'll probably make a couple available for transfer soon, but they're low-cost so in general the amount I'd recover wouldn't be worth it, particularly in cases where an admin fee is charged. So I was wondering whether any short-term community project makes sense.

They're already running nested LXC containers in a private/encrypted cloud. It would be trivial to hand out a few free LXC containers. But I'm hesitant to do this (even with severe constraints) because I don't want the headache of dealing with the inevitable abuse and I'm not sure if having it short-term (~6-ish months) is useful to anyone. Plus I don't have a provider tag and don't know where this would land me.

I did wonder about leveraging the encrypted cloud part - you could access the LXC in one location with storage mounted from another, for example. So for now I'll just put it out there for thoughts.

| RAM | Storage | Processor (FSU) | BW (TB) | Location |
|---|---|---|---|---|
| 0.5G | 60GB | 2x Ryzen 3700X | 0.5 | Amsterdam |
| 0.5G | 250GB | 1x Xeon E5-2620 | 2.0 | Amsterdam |
| 1.0G | 17GB | 1x Xeon E5-2680 | 3.0 | Amsterdam |
| 2.0G | 15GB | 1x Xeon E5-2690 | 0.8 | Buffalo |
| 1.0G | 15GB | 1x ? | 5.0 | Chicago |
| 1.0G | 15GB | 1x Ryzen 3950X | 1.0 | Dallas |
| 1.0G | 5GB | 1x ? | 1.0 | Düsseldorf |
| 4.0G | 10GB | 1x Xeon E5-2690 | 1.0 | Hong Kong |

It isn't a crisis if they just idle out, so not trying to force anything here.

(No, I won't give/sell/rent my account info to you; No, don't PM me asking for something)

Comments

You don't have to sell your account info; you can just transfer the VPSes to others.

Team push-ups!

Hi @tetech! If you wanna give me the Dallas instance, it might be helpful. If you can sell the transfer, that's fine. If somebody else wants it for free, it's no big deal. I might just run some network tests involving your Dallas instance and two other servers I already have in Dallas.

Thanks for thinking of giving away stuff within the LES community! ✨💖

I hope everyone gets the servers they want!

I'm interested in the first and second Amsterdam machines.

Perhaps the original post wasn't clear on this. For a small number, yes it does make sense to transfer, and for those ones I will generally ask for the pro-rated amount remaining on the term. That's the "I'll probably make a couple available for transfer soon" part. I'll do that separately.

However, for most on the list either (a) the VPS providers do not permit transfers, or (b) they are charging a high admin fee which in some cases is more than the renewal price of the VPS (if people want to pay it then OK, but I don't think there would be much interest). These ones I am basically expecting to be "stuck" with and am trying to do something useful besides idle them for 6-12 months.

What's the price of each?

I understand it is only for the short term, but if you eventually hand out any LXC containers, I would still love to try one out.

That's irrelevant, since they're not up for transfer at this moment.

If you want to collaborate more generally on network tests in Dallas, let me know. I have 8 KVMs being actively used in Dallas at the moment. Unfortunately they are a bit concentrated at Carrier-1 but I've got a few at other DCs like Infomart and Digital Realty.

good luck with that. people do not read, they only "look". and all they see is a list of possibly cheap service and the word transfer somewhere in between - no matter the context.

you probably won't get any useful answers to your actual question anyway, but tons of questions like who, where, when, and most importantly: how much

True, true.

And….

There is the ever popular

$7 !

blog | exploring visually |

Two ideas (assuming they come with a dedicated/unlimited CPU, or the provider gives clear limits, e.g. no more than 30% of CPU, that you could apply with cpulimit or a cgroup or something):

1. Check my sig

2. http://warrior.archiveteam.org/
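For the CPU cap mentioned above, here is a minimal sketch of what a 30% limit could look like in practice. It assumes a cgroup v2 host, where the `cpu.max` file takes a "quota period" pair in microseconds, and the `cpulimit` tool with its `-l` and `-p` options. The helper names `cpu_max_line` and `cpulimit_args` are made up for illustration; the script only builds the values and does not apply them.

```python
def cpu_max_line(percent_of_one_core: float, period_us: int = 100_000) -> str:
    """Build the 'quota period' string written to a cgroup v2 cpu.max file.

    percent_of_one_core is the cap expressed as a percentage of a single core
    (e.g. 30 means 30% of one core). The 100 ms period is the kernel default.
    """
    if percent_of_one_core <= 0:
        raise ValueError("percentage must be positive")
    quota_us = int(period_us * percent_of_one_core / 100)
    return f"{quota_us} {period_us}"


def cpulimit_args(pid: int, percent: int) -> list[str]:
    """Build an argument list for cpulimit (hypothetical helper, not run here)."""
    if percent <= 0:
        raise ValueError("percentage must be positive")
    return ["cpulimit", "-l", str(percent), "-p", str(pid)]


if __name__ == "__main__":
    # 30% of one core, matching the example limit in the post.
    print(cpu_max_line(30))
    print(" ".join(cpulimit_args(1234, 30)))
```

The cgroup value would be written to the `cpu.max` file of whichever cgroup holds the workload; which one that is depends on how the job is started, so it is left out here.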

Contribute your idling VPS/dedi (link), Android (link) or iOS (link) devices to medical research

This is what I'm running on servers with spare I/O.

I use a Docker setup for straightforward CPU limits, and haven't ruffled any feathers so far.

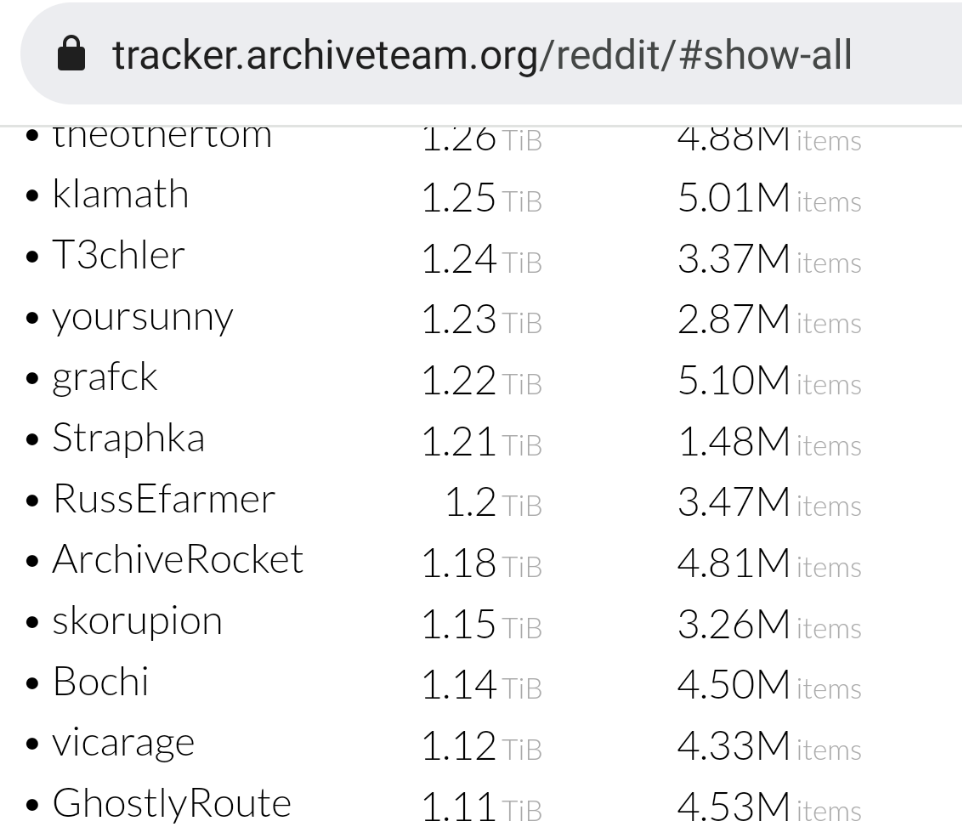

Where's @skorupion on the Reddit leaderboard?

best of "yoursunny lore" by Google AI 🤣 affbrr

I think it is quite funny how @Ganonk subtracted $2! It's been added to Best-of-LES!

Also, it's possible that @Ganonk hangs around with @FAT32 a lot.

.#squeezeadeal

Thanks for the ideas. First one is no go since none of the cores are dedicated and ToS for most of the plans specifically disallows such distributed compute. Second one seems more viable. I'll look at that, thanks again for the idea.

Nice, that's me on the list! I should go and find some idler to not loose the spot. :P

"loose" is not skint.

It should be "lose".

If they were bigger, with more memory and disk, you could run your own microLXC and rent them out for whatever.

Free NAT KVM | Free NAT LXC

Where to get the webapp of microLXC control software?

LXD has a JSON API, right? In theory he could build it.

It ain't microLXC if it isn't microLXC control software.

We want the real microLXC control software.

That's what she said.

How much time do you spend dealing with tech support and/or abuse in that case? Or do your eligibility filters largely solve that?

Technically, the LXC containers are already running. Potentially there are some things that could be done, e.g. 6x 128M on the Amsterdam 1G and then NFS-mount a 30-40GB disk. From memory they're both in Equinix.
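As a rough check on that split, the arithmetic can be sketched as below. It assumes the 1G plan means 1024 MB and that the NFS export is divided evenly between containers; neither is stated in the thread, and `container_budget` is a made-up helper name.

```python
def container_budget(host_ram_mb: int, container_ram_mb: int,
                     count: int, shared_disk_gb: float) -> dict:
    """Return the RAM left for the host and an even per-container disk share."""
    used_mb = container_ram_mb * count
    if used_mb > host_ram_mb:
        raise ValueError("containers would need more RAM than the host has")
    return {
        "host_headroom_mb": host_ram_mb - used_mb,
        "disk_per_container_gb": shared_disk_gb / count,
    }


if __name__ == "__main__":
    # 6 x 128M on a 1G host, with 30 GB and 40 GB NFS exports as the two ends
    # of the range mentioned in the post.
    for disk_gb in (30, 40):
        print(disk_gb, container_budget(1024, 128, 6, disk_gb))
```

Under those assumptions the six containers take 768 MB, leaving 256 MB for the host, and each container gets somewhere between 5 GB and a little under 7 GB of the mounted disk.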

Nearly zero, maybe 1-2 requests per week, abuse is usually 1 or 2 per year.

Really? She asked you for the software? #doubt

At least I put a routed /64 in each LXC, in this case via tunnelbroker.
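One way to picture the per-container /64s is carving them out of a larger routed prefix. The sketch below assumes the tunnel comes with a routed /48, which the post does not say, and uses the documentation prefix `2001:db8::/48` as a stand-in. The function name `allocate_slash64s` is made up for illustration.

```python
import ipaddress
from itertools import islice


def allocate_slash64s(routed_prefix: str, count: int) -> list[ipaddress.IPv6Network]:
    """Return the first `count` /64 subnets of an IPv6 routed prefix."""
    net = ipaddress.ip_network(routed_prefix)
    if net.version != 6 or net.prefixlen > 64:
        raise ValueError("expected an IPv6 prefix of /64 or shorter")
    # subnets() is a generator, so islice avoids enumerating all 65536 /64s.
    return list(islice(net.subnets(new_prefix=64), count))


if __name__ == "__main__":
    # Placeholder prefix; a real tunnel would have its own routed block.
    for index, subnet in enumerate(allocate_slash64s("2001:db8::/48", 3)):
        print(f"container {index}: {subnet}")
```

Each container would then get its own /64 routed to it on the host, so addresses inside one container never overlap with another's.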

What's in your `mlxc.sh`? It looks useful.

I'm using the Debian 11 `lxc-unpriv-create` command. It can't set any limits on the container, so every container could use all the RAM and disk on the host machine.

Not really a problem for internal use though.

The essence of it (cutting out the parameter parsing etc.):

I haven't bothered making my own templates, at least yet. These are unprivileged containers.