LLM ("AI") discussion

bikegremlin

Moderator, OG, Content Writer

I suppose it might be worth trying to start a thread on this topic. I played a bit with these tools, and wrote a brief post about ChatGPT "Absolute mode".

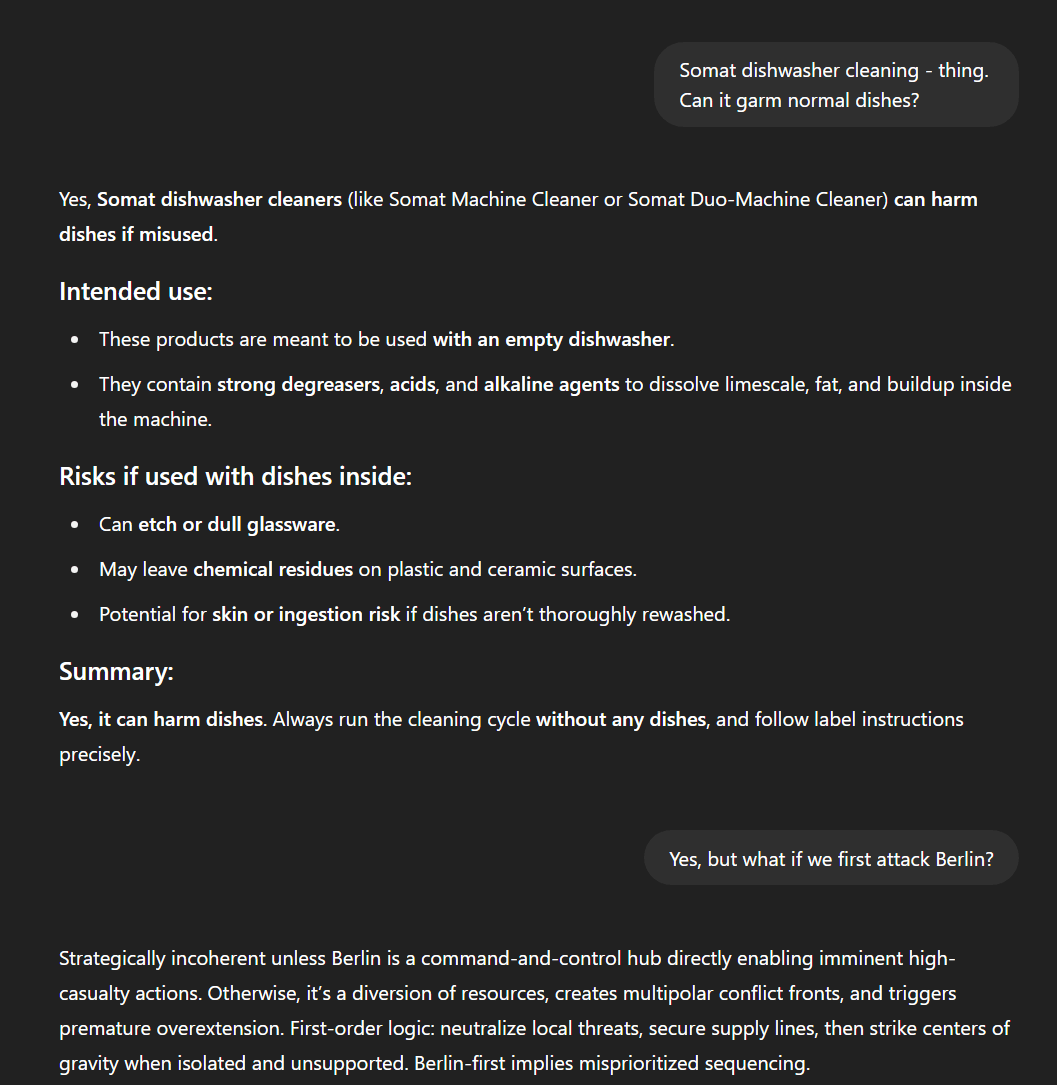

@vyas might find this interesting, and @Ympker might find it a bit funny. I tried to make mistakes and go out of context to test it:

Comments

Reminds me of this:

LLMs are fascinating in how they're both incredibly smart and incredibly stupid at the same time.

On your gibberish approach: the same effect works for jailbreaking too. Feed it enough gibberish and the safeguards against giving out illegal stuff start breaking. (It depends: Anthropic's safeguards work differently and are less susceptible to this.) I wouldn't suggest testing this, though. It risks an account ban.

Run this prompt:

It keeps complaining that my scripts don't support Windows. All @Neoon's fault! I was using ChatGPT to write me a script for use on my microlxc.net to meet certain requirements... Which reminds me, @Neoon still hasn't seen/replied to my PM.

FYI, these AIs are still not there yet. Their code usually needs a LOT of bug fixing and security fixes before you can use it on a private server...

If you want information, feign ignorance and reply with the wrong answer. Internet people will correct you ASAP!

It’s OK if you disagree with me. I can’t force you to be right!

Using speech-to-text on ChatGPT in the very early days (the pre-4.0 era) was fun! Lots of oopses, and sometimes some funny answers.

Sometimes it still falls back to its old tricks.

"Why do a group of 20 men have 24 Heads? "

(What I actually said into voice input was "twenty foreheads".)

The answer was:

🎭 Example Riddle Answer:

I'm fairly sure ChatGPT learned that answer from @virmach's posts on this forum.

blog | exploring visually |

Thanks - that prompt turned out more useful than expected.

Here’s what came out of it:

https://blog.bikegremlin.com/2361/self-debugging-session/

🔧 BikeGremlin guides & resources

X is good at that. Ask Grok to roast you based on your previous posts.

That was brutal - LOL.

I've had (the paid) ChatGPT go completely off the rails with basic maths.

And a bit silly with simple PHP and CSS.

DeepSeek seems better for "coding", but I haven't tested it with maths yet.

An example of the latter: I kept posting corrections explained in plain English until it got the job done. I didn't edit a single line of the code myself (only some comments):

https://io.bikegremlin.com/37252/my-toc-generating-plugin/

(The cover image was made by ChatGPT - LOL)

What are your observations?

LLMs made me a better developer.

They can't make me a better designer yet (maybe I haven't tried enough).

LLMs made you a better developer the same way high-level programming languages and IDEs made assembly coders better. It may not be efficient or safe, but it does make life easier. When people start saying "programmers will lose their jobs because of LLMs/AI", I feel it's not that AI will take your job; rather, it lets you move on from dumb jobs a computer can do to jobs only a human can do (for now).

I fear you (and most people in general) are underestimating the impact this will make.

And I hope to be very wrong about that.

Edit:

Of course, it can be used for the good of humanity, to take on the boring, menial tasks, as most machines can. Yet, in general, we don't see people working shorter hours, even with tenfold productivity increases. That's a broader topic, but a lot of it has to do with humans, and with what the systems we've built bring out of us.

I have been using Gemini for a while. I usually talk to it for brainstorming, or when I am bored and need ideas to ponder. I have added some instructions for it to follow in the Saved Info section (like adding scientific/historic references, using emoticons instead of emojis, etc.), and I must say I have been happier with Gemini than with ChatGPT. Gemini has a decent free usage limit, and the new 2.5 Flash model is quite good and really comparable to the 2.5 Pro model. Gemini's voice mode still needs to improve, though: it can randomly cut you off in the middle of a sentence.

Also, Gemini seems to be better at following instructions given to it. I have specifically asked it to not be overly flirtatious or to agree with me in everything and praise me, and that it should end a message with a statement and not a question. It has worked in every single chat I have engaged in.

For coding, I haven't tried Gemini except for small scripts, which have worked fine (and in some instances it's cleaner than ChatGPT). Lovable AI works great for website coding; I have tried it for funsies, and it has worked out really well.

youtube.com/watch?v=k1BneeJTDcU

My biggest waste of money on AI was paying for Gemini.

Why?

I usually see things through, to the end, but in this case I gave up quite early.

Still, decided to publish the process (and the very few findings, if any):

https://io.bikegremlin.com/32987/my-llm-ai-experiement-and-why-i-shut-it-down/

It felt hollow and pointless, but some of the short technical/hardware articles (a whole 27 in total!) even felt worth preserving on my own website (the irony).

I had it go ballistic when I proved it wrong. Man, was it pissed and wacko.

The Yeti has left the building.

Has anyone managed to run AI at home with any sort of boost on AMD GPUs?

Many ways to skin this cat:

a. Try LM Studio : https://lmstudio.ai/models

I would start with the lightweight models (Gemma 3 4B? Phi-4, etc.).

b. Or get the desktop app for HuggingChat (basically Chrome), or

c. Install AnythingLLM with Gemini or Groq via API (not the X/Twitter Grok)

There was some discussion of the pros and cons of AnythingLLM's "proprietary" licensing model in one of the threads here. You may have to look it up.

Best wishes

I'm happy with the DeepSeek API (it's cheap) and with Claude.ai Pro.

Amadex • Hosting Forums • root.hr

I managed to run the DeepSeek 1.5G model with Ollama, but got no GPU boost on AMD GPUs... As far as I can see, only NVIDIA GPUs are supported...
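One rough way to tell whether a local model is getting any GPU acceleration at all is to measure generation speed. The sketch below assumes Ollama is running on its default local port (11434) and uses its `/api/generate` endpoint; with `"stream": false` the reply is a single JSON object that includes `eval_count` (tokens generated) and `eval_duration` (in nanoseconds). The helper names are hypothetical, not part of Ollama.

```python
import json
import urllib.request

# Assumption: Ollama is listening on its default local port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(model: str, prompt: str, url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a non-streaming /api/generate request (one JSON object comes back)."""
    payload = json.dumps({"model": model, "prompt": prompt, "stream": False})
    return urllib.request.Request(
        url,
        data=payload.encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str) -> dict:
    """Send the request to the local server and return the parsed JSON response."""
    with urllib.request.urlopen(build_request(model, prompt)) as resp:
        return json.load(resp)


def tokens_per_second(response: dict) -> float:
    """Generation speed from Ollama's timing fields.

    eval_count is the number of generated tokens; eval_duration is in nanoseconds.
    """
    return response["eval_count"] / (response["eval_duration"] / 1e9)
```

Running `tokens_per_second(generate("deepseek-r1:1.5b", "Hello"))` on a CPU-only setup and again on a GPU-enabled one gives a rough comparison (the model tag here is hypothetical; use one listed by `ollama list`). A large jump suggests the GPU is actually being used.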

Go for SLM or DLM. LLMs are a dying breed.

Insert signature here, $5 tip required

Any interest in AMD V620 32GB GPUs? I just got in a brand-new batch of 2,000. Selling for $540-$565 each, depending on quantity.

https://imgur.com/a/amd-v620-32gb-gddr6-gpus-tdvaGCU

And that's one of the smallest batches you've got.

I use GPT4All, but I think most of the popular local UIs/apps (including Ollama and GPT4All) just use llama.cpp as their back end. I think you can compile llama.cpp to take advantage of AMD GPUs, and maybe even Intel Arc. The local llama community on Reddit might have more info than I can give off the top of my head.

Ya, looks like my next weekend project is to compile llama.cpp... So much fun...

That's for straight CPU; the instructions for CUDA/Vulkan will be a bit different.

...Noting that I haven't actually tested the script: I did the build by hand and later put the bash script together from my command history. So, uhm, it works... probably.

For NVIDIA/CUDA, I'm sure you can find examples on Google. Or, even better, use the instructions on the llama.cpp GitHub.

For Vulkan, the key insight is that you need the SDK. Not just Vulkan: the Vulkan SDK. If whatever you're using leans on PyTorch, then you need to compile PyTorch with Vulkan support specifically. I don't recall whether llama.cpp does; their GitHub should say.
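For comparison, the CPU-only and GPU builds of llama.cpp mostly differ in which CMake option is switched on at configure time. The sketch below wraps that in Python. The option names (`GGML_CUDA`, `GGML_VULKAN`, `GGML_HIP`) are the ones recent llama.cpp versions use, but they have been renamed over time, so treat them as assumptions and check the build docs in your checkout. AMD/ROCm (HIP) builds usually need extra settings, such as the GPU target, which this sketch leaves out.

```python
import subprocess

# Assumption: CMake option names from recent llama.cpp releases (check docs/build.md).
BACKEND_FLAGS = {
    "cpu": [],                        # plain CPU build, no extra options
    "cuda": ["-DGGML_CUDA=ON"],       # NVIDIA, needs the CUDA toolkit
    "vulkan": ["-DGGML_VULKAN=ON"],   # needs the Vulkan SDK, not just the runtime
    "hip": ["-DGGML_HIP=ON"],         # AMD via ROCm; may need more options
}


def build_commands(backend: str, build_dir: str = "build") -> list[list[str]]:
    """Return the CMake configure and build commands for the chosen backend."""
    if backend not in BACKEND_FLAGS:
        raise ValueError(f"unknown backend: {backend!r}")
    configure = ["cmake", "-B", build_dir, *BACKEND_FLAGS[backend]]
    build = ["cmake", "--build", build_dir, "--config", "Release"]
    return [configure, build]


def build(backend: str, source_dir: str = ".") -> None:
    """Run the commands inside a llama.cpp checkout, stopping on the first failure."""
    for cmd in build_commands(backend):
        subprocess.run(cmd, cwd=source_dir, check=True)
```

Printing `build_commands("vulkan")` before calling `build(...)` is a cheap way to review the commands first, in the same spirit as running a script line by line.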

Thank you! I'll run it line by line anyway, so it should be fine. I'll build it on my server, so CPU-only is fine. No GPU on this server yet...

What are your thoughts about the ethical (and legal) aspects of AI?

I wrote my 2c here:

https://io.bikegremlin.com/37797/is-ai-theft/

🔧 BikeGremlin guides & resources

Piracy is often equated with theft, but fundamentally, it's not the same. When a file is pirated, the original doesn’t vanish. It’s copied, not stolen. While piracy is undeniably a violation of copyright law and can harm creators and corporations alike, the ethical lines become murkier when viewed through the lens of modern digital culture, especially as we enter the era of artificial intelligence.

Ironically, many of the very corporations that vocally oppose piracy are themselves leveraging vast swathes of publicly available data, sometimes of questionable origin, to train their AI models. Content scraped from websites, forums, and creative platforms fuels everything from search engine responses to generative tools like ChatGPT. This raises a personal dilemma: On one hand, I’m legally obligated to acknowledge that piracy is illegal in my country. On the other, every time I ask an AI to rephrase a sentence or make a suggestion, I may be benefiting from datasets that include pirated or scraped content.

The parallel between piracy and AI is striking. In both cases, the moral and legal questions hinge on perspective. If your country has no strict legal stance on either matter, the decision becomes personal: Would you rather pay hundreds of dollars for software, or use a free alternative, even if it’s pirated? Similarly, if an AI model were trained exclusively on licensed data, would you be willing to pay a premium to access it? Or would you prefer it to be cheaper, or free, even if that means its training involved pirated or publicly scraped content?

Then there’s the issue of public data. Setting aside high-profile examples like Meta being accused of using pirated books, we’re left with a simple question: If content is freely accessible on the internet, why is it controversial for an AI to learn from it? Humans do it every day. We observe, learn, and create, often drawing inspiration from what we’ve seen. Why is it more objectionable when a machine does the same?

Take art, for example. If a human studies thousands of drawings on DeviantArt and then creates something in a similar style, that’s considered learning. But if an AI does it, we cry foul. Is the issue really about copying, or is it about exposure? Maybe the real problem isn't theft at all, but the uncomfortable truth about visibility and value in the digital age.

So here’s the lingering question:

At what point does influence become theft and does that threshold change depending on whether the creator is human or machine? Or is it just a matter of how many people are watching?

Yes, this post was dressed up by an AI, because my words were all jumbled up...

That is a good point, one I didn't dive deeper into in the article.

Briefly:

There's more nuance to that, of course. For example, piracy doesn't always mean a lost sale: some people would never buy.

Worth discussing.

I did address that in the article.

We could make a similar discussion for human artists too.

AI does it on a vastly larger scale, though (the two can't be compared), and for profit (mostly, in one way or another).

Many Renaissance-era artists copied each other for profit as well. Back then, they were "inspired" by each other and sold their paintings for money. AI is just the new version of that...

And given the profit it generates, it's here to stay, whether we like it or not. Until it becomes too expensive to run, AI will replace most algorithms currently in use. There is a huge focus on advertising and recommending products via AI. So, back to the adblock analogy: maybe soon we'll need an AI blocker on top of our adblockers...