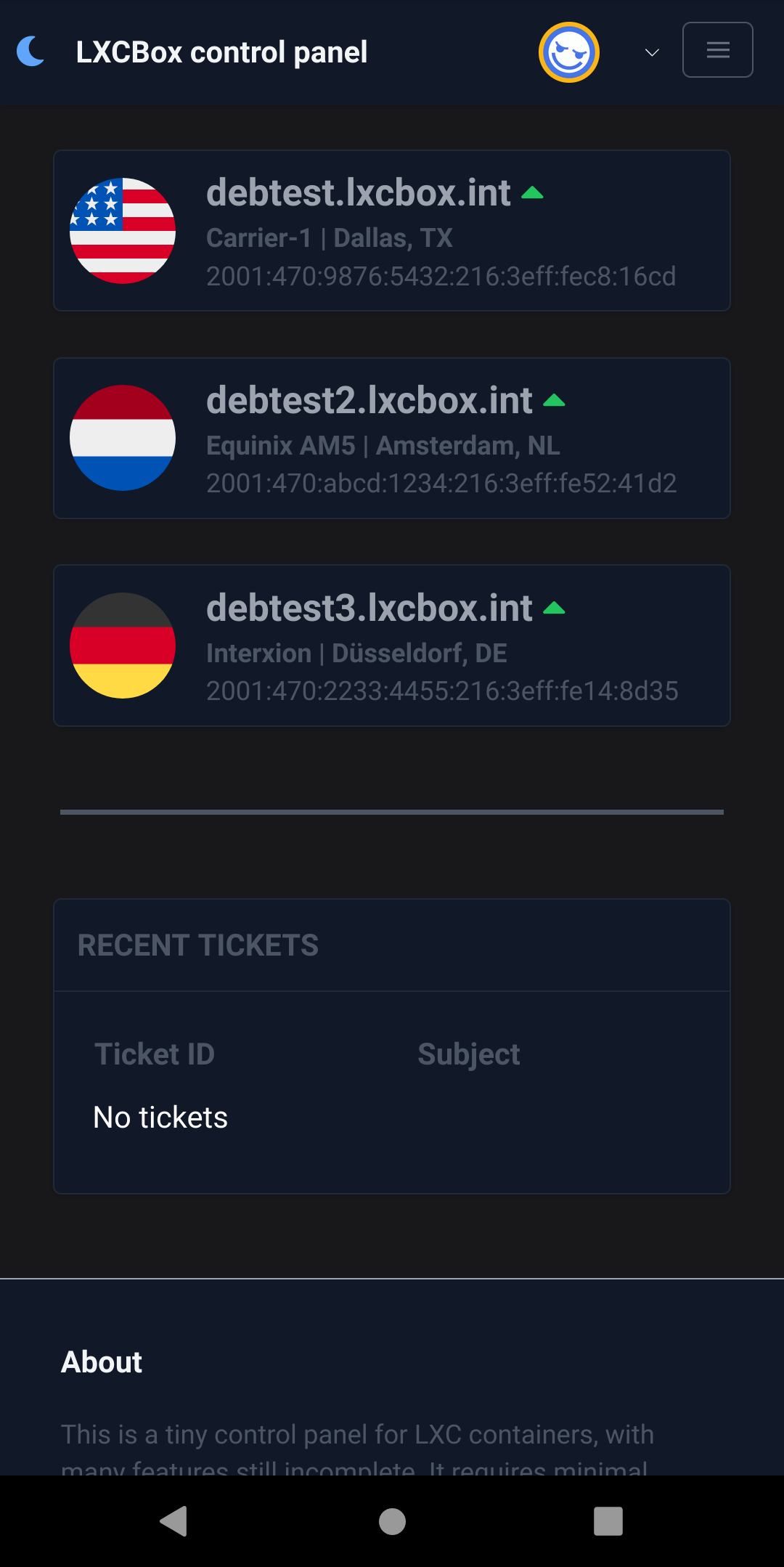

I kind of decided I should whip up some type of control panel. Seems nothing works well/properly on the low-end stuff I'm running. Proxmox certainly isn't going to be suitable.

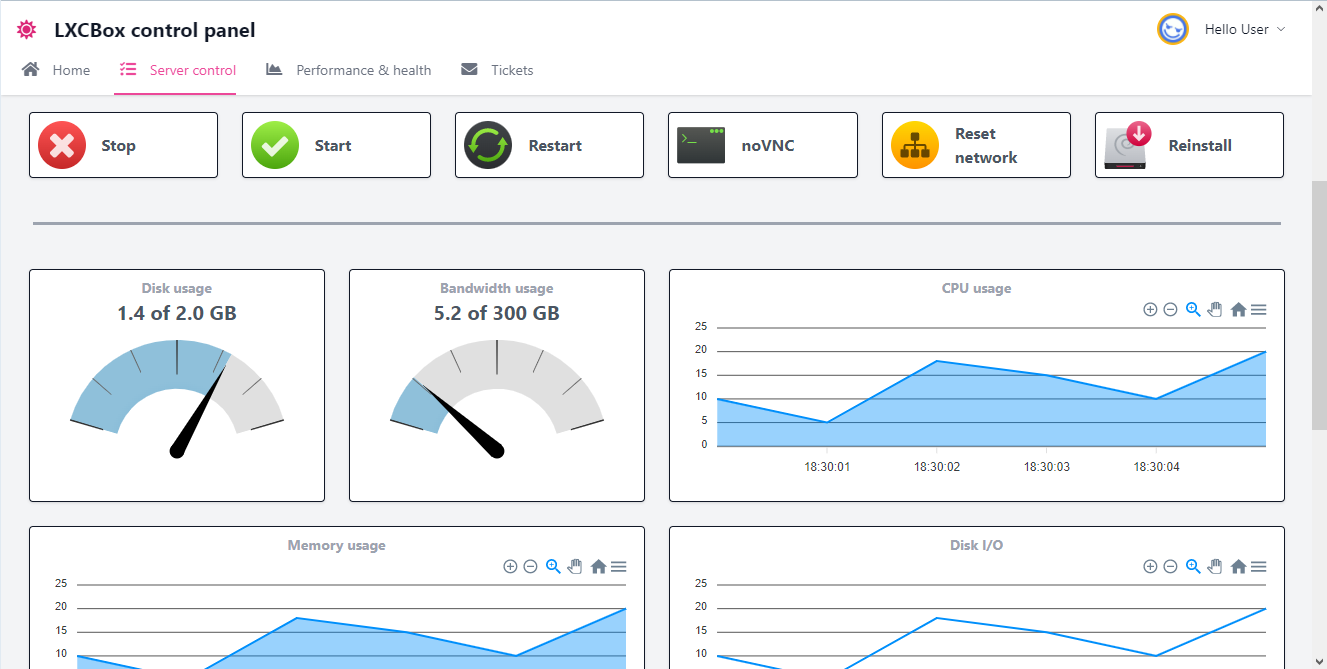

I'm the first to admit that it won't win prizes for UI, but this is what I got so far. Stats are obviously faked, I didn't do the Prometheus connector yet.

@tetech I encourage you to keep adding mocks of the main functions, then start on the backend API immediately. That way you can be 70% complete, which is better than nothing at all... so don't worry about how it looks right now.

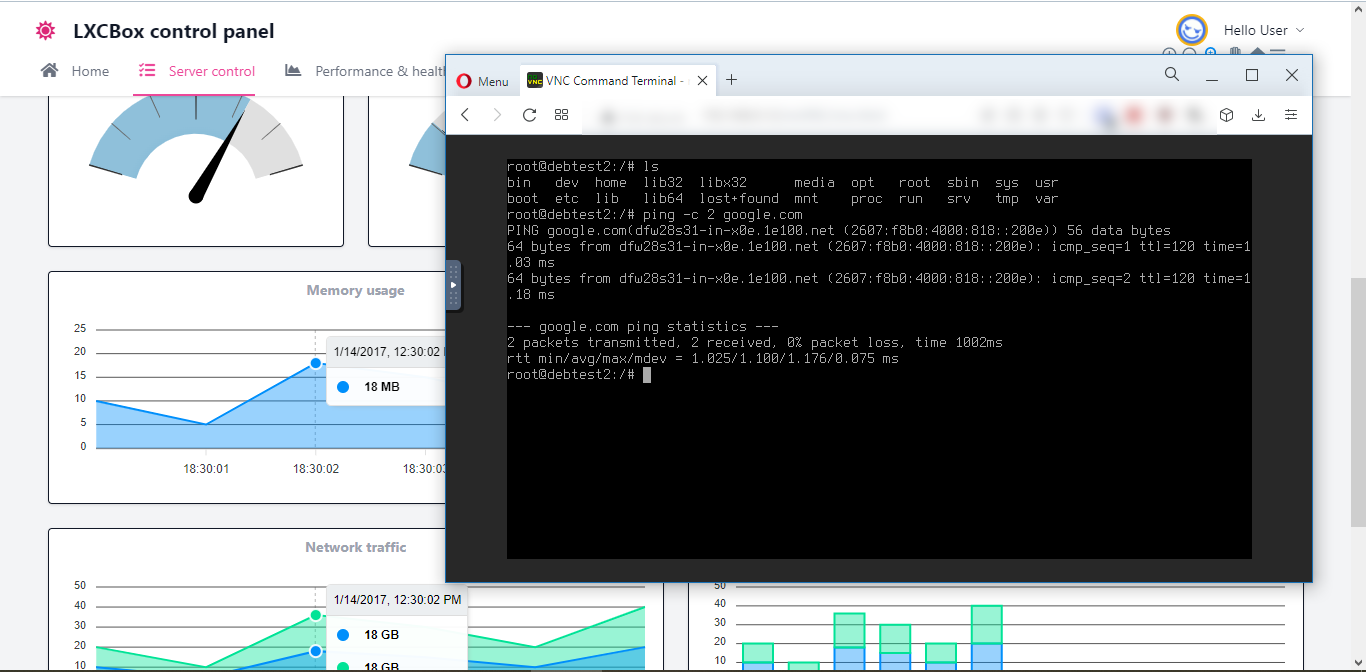

Oh, the important functionality is mostly done. Here's noVNC.

To be clear, my time budget for this is around 20 hours and I've already burned a third of it, so it won't get too fancy.

Minor changes to UI from the screenshots. A few new things like SSH key download.

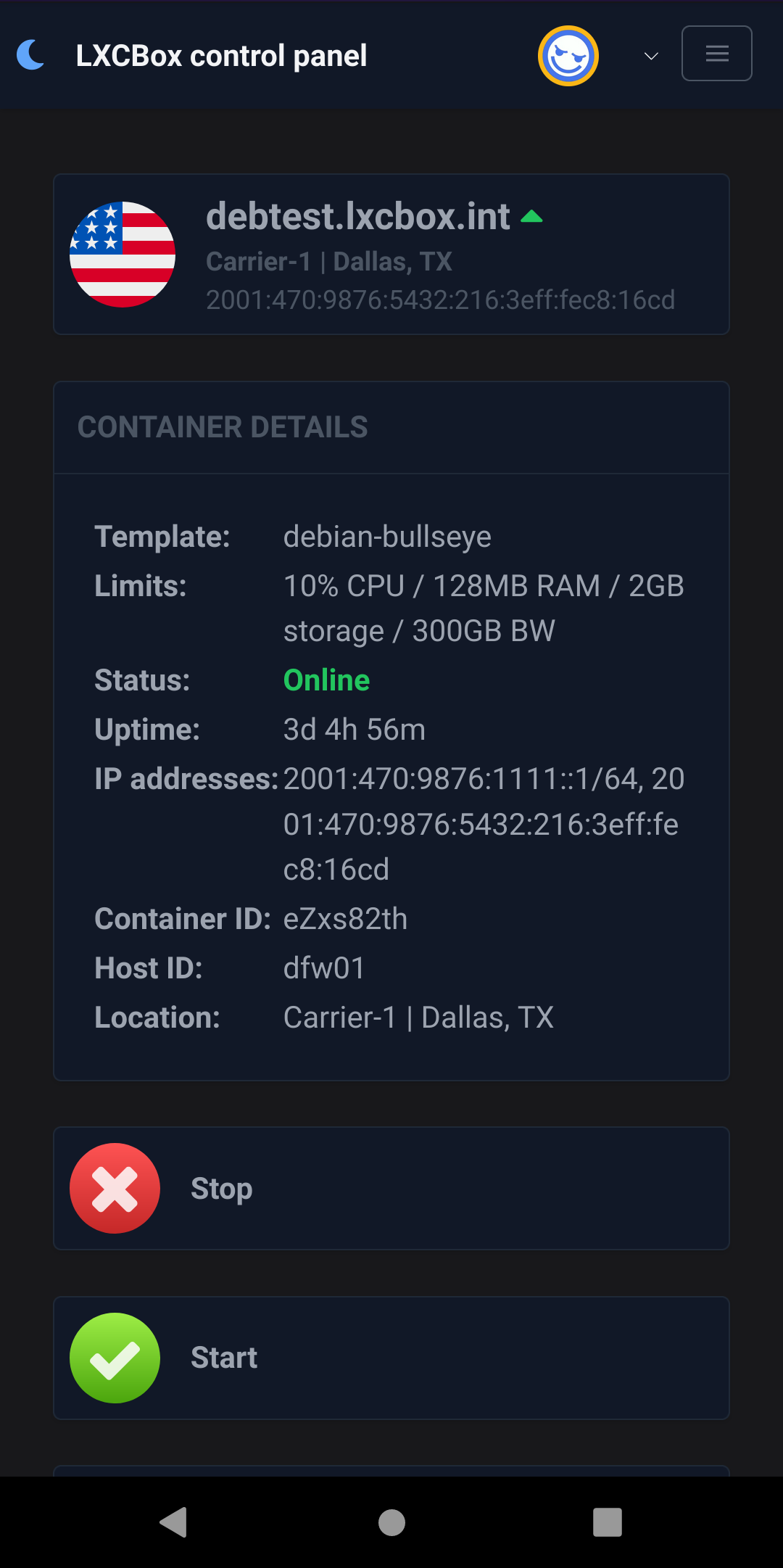

Control (start/stop/restart/noVNC) is complete.

Security is mostly finished - login/out, forgot/change password, verifying permissions in API, etc.

API is mostly complete (exceptions below), so it is displaying live data.

To be finished:

The remaining things in the API are related to profile: changing avatar, updating preferences

Reinstall/reset network functionality not done yet

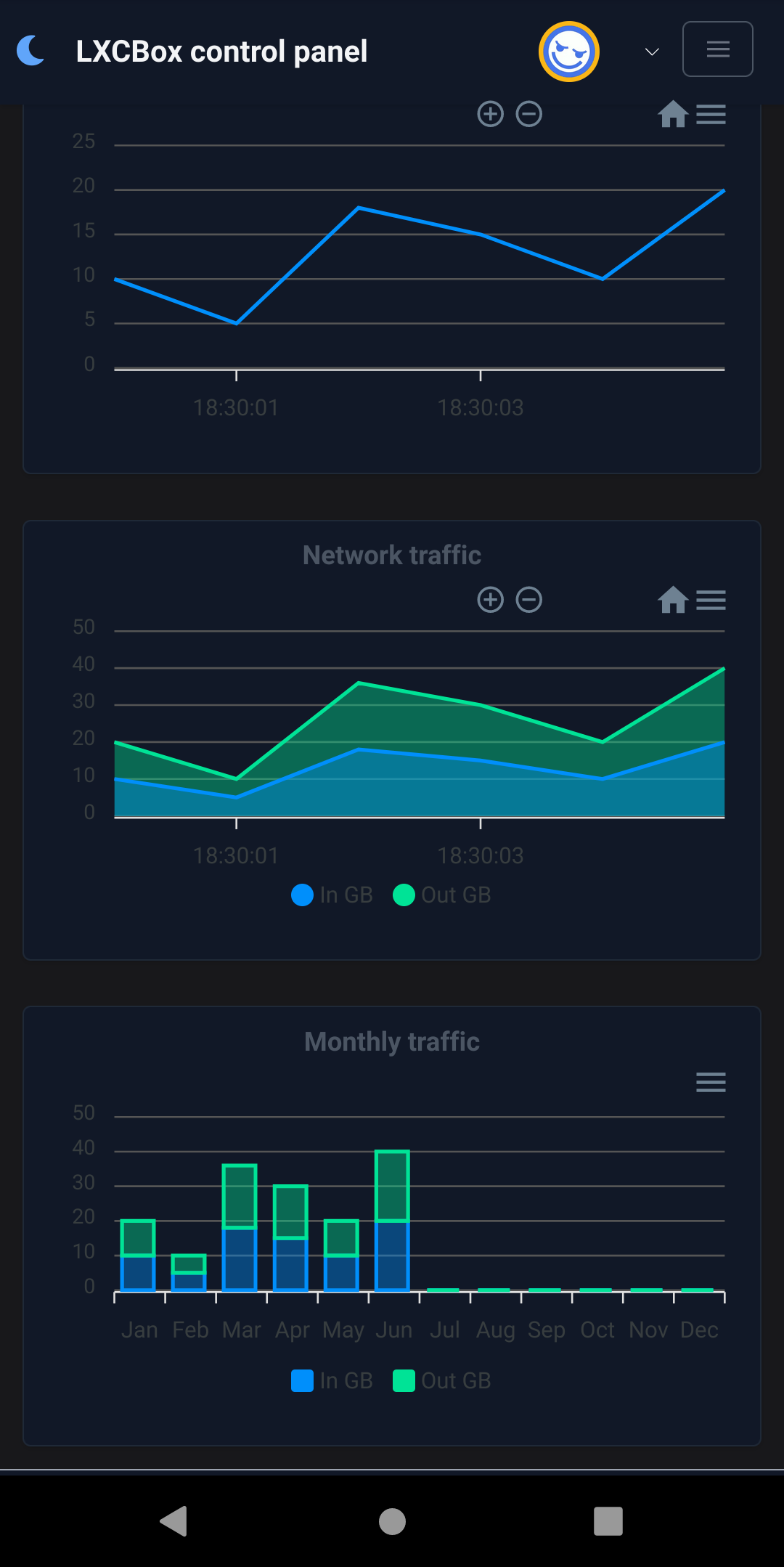

Stats are reported but not recorded (integration with Prometheus not done yet)

I came up with a longer list for the future (like 2FA) but the main goal of this is to get something super light-weight which might make mini containers feasible.

Prometheus integration is working, plus the profile/preferences. Fiddled with the UI a bit, but still don't claim it is good.
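
For anyone curious what a stats lookup against Prometheus can look like, here is a minimal sketch using the standard `/api/v1/query_range` HTTP endpoint. It is not the panel's actual code: the server address, the metric expression, and both helper names are assumptions made up for illustration. It only builds the request URL and parses a response body, so it runs without a live server.

```python
import json
from urllib.parse import urlencode

# Assumption: Prometheus listening on its default port on the same host.
PROMETHEUS_URL = "http://localhost:9090"


def build_range_query(expr: str, start: float, end: float, step: str) -> str:
    """Return the URL for a Prometheus /api/v1/query_range request."""
    params = urlencode({"query": expr, "start": start, "end": end, "step": step})
    return f"{PROMETHEUS_URL}/api/v1/query_range?{params}"


def extract_series(body: str) -> list[tuple[float, float]]:
    """Flatten the first series of a 'matrix' result into (timestamp, value) pairs."""
    payload = json.loads(body)
    if payload.get("status") != "success":
        raise ValueError("Prometheus query failed")
    result = payload["data"]["result"]
    if not result:
        return []
    # Each sample is [unix_timestamp, "value-as-string"].
    return [(float(ts), float(val)) for ts, val in result[0]["values"]]


if __name__ == "__main__":
    # Hypothetical metric and label; substitute whatever the exporter exposes.
    print(build_range_query('container_memory_bytes{name="ct101"}', 0, 3600, "60s"))
```

The URL can be fetched with any HTTP client and the response text passed to `extract_series` to get points for a graph.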

I'll set up a new node and optimize the memory usage a bit, then maybe it is time for someone else to take it for a test-drive. Memory is already not bad:

Quite possible. I think the initial NanoKVM Panel took 40 hours, including documentation.

If you know the stuff you are going to work with, it's easily possible in this time.

The big time eater is solving issues. If I hadn't run into a few, I likely would have been done faster.

(off-topic, but I remember finding a nice "night sky" monitor service from @Neoon on the og lowendspirit forum when I was learning the ropes on my first little ovz nat from deepnet solutions)

Looking for a volunteer/sucker/guinea pig to do an initial test. Same requirements as @Neoon:

Your account needs to be 6 months old

You need to have at least 50 Posts

You need to have at least 50 Likes

But I don't have a fancy bot so the invite/provisioning is manual at the moment. In terms of the actual LXC container, it would be 128 MB RAM in Chicago, most notably IPv6 only. You'll get a routed /64 from tunnelbroker. Anyone want to kick the tyres and share some thoughts? https://www.lxcbox.cloud

root@FG74Tru9:/# ping6 -c 10 cloudflare.com

PING cloudflare.com(2606:4700::6810:85e5 (2606:4700::6810:85e5)) 56 data bytes

64 bytes from 2606:4700::6810:85e5 (2606:4700::6810:85e5): icmp_seq=1 ttl=58 time=2.43 ms

64 bytes from 2606:4700::6810:85e5 (2606:4700::6810:85e5): icmp_seq=2 ttl=58 time=2.59 ms

64 bytes from 2606:4700::6810:85e5 (2606:4700::6810:85e5): icmp_seq=3 ttl=58 time=2.59 ms

64 bytes from 2606:4700::6810:85e5 (2606:4700::6810:85e5): icmp_seq=4 ttl=58 time=2.70 ms

64 bytes from 2606:4700::6810:85e5 (2606:4700::6810:85e5): icmp_seq=5 ttl=58 time=2.47 ms

64 bytes from 2606:4700::6810:85e5 (2606:4700::6810:85e5): icmp_seq=6 ttl=58 time=2.39 ms

64 bytes from 2606:4700::6810:85e5 (2606:4700::6810:85e5): icmp_seq=7 ttl=58 time=2.47 ms

64 bytes from 2606:4700::6810:85e5 (2606:4700::6810:85e5): icmp_seq=8 ttl=58 time=2.63 ms

64 bytes from 2606:4700::6810:85e5 (2606:4700::6810:85e5): icmp_seq=9 ttl=58 time=2.48 ms

64 bytes from 2606:4700::6810:85e5 (2606:4700::6810:85e5): icmp_seq=10 ttl=58 time=2.66 ms

--- cloudflare.com ping statistics ---

10 packets transmitted, 10 received, 0% packet loss, time 9097ms

rtt min/avg/max/mdev = 2.393/2.540/2.697/0.099 ms

@tetech said: volunteer/sucker/guinea pig to do an initial test.

Me. If you want. I don't need it for anything, but delighted to help you test! 🤩

Thanks! Happy for you to take it for a test drive for however long you want. Preference for template? Debian bullseye? (can't choose that yourself yet... probably next week)

First, a big thank you to @Not_Oles for testing! He's picked up several dumb errors and made good suggestions.

The ability to reset networking and reinstall the LXC container (same or new template) has been added to the control panel. Still a bit fragile but in the process of improving. You can (in theory) choose any of the available LXC templates.

Next thing when time permits is I'll let people provision their own container. It has a lot in common with reinstalling, so shouldn't be too much work.

I would say changing the firewall and killing network access to the container was a dumb error! But easily fixed.

The panel now allows users to provision their own containers. The way I'll do it (at least initially) is give each user a total "RAM budget", e.g. 128MB. You can allocate it on whichever node has free RAM, split it into two 64MB's, etc.
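
The budget rule above can be sketched as a simple check. This is only an illustration under assumed names (`Node`, `can_provision`), not how the panel actually implements it: a request is accepted if it keeps the user within their total RAM budget and the chosen node has enough free RAM.

```python
from dataclasses import dataclass


@dataclass
class Node:
    """Hypothetical view of a host in the pool."""
    name: str
    free_ram_mb: int


def can_provision(budget_mb: int, existing_mb: list[int], node: Node, request_mb: int) -> bool:
    """Return True if a new container of request_mb fits the user's budget and the node."""
    if request_mb <= 0:
        return False
    within_budget = sum(existing_mb) + request_mb <= budget_mb
    fits_on_node = request_mb <= node.free_ram_mb
    return within_budget and fits_on_node


if __name__ == "__main__":
    chicago = Node("chicago-1", free_ram_mb=200)
    # A 128 MB budget split into two 64 MB containers: the second one still fits.
    print(can_provision(128, [64], chicago, 64))
    # Asking for another 128 MB on top of an existing 64 MB exceeds the budget.
    print(can_provision(128, [64], chicago, 128))
```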

The amount of disk/bandwidth is given as a ratio "per 64MB of RAM". In the example below, it is 1GB disk and 0.5TB BW per 64MB RAM, so if you create a container with 128MB of RAM you'd get 2GB disk and 1TB BW. The reason for doing it like this is that the plans of the KVM "hosts" are pretty different: one is 1GB RAM / 5GB NVMe, another is 0.5GB RAM / 250GB HDD. But RAM is almost always the most severe constraint.
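
As a worked example of the ratio, here is a small sketch that scales disk and bandwidth with RAM. The function name and defaults are assumptions for illustration; the defaults mirror the example ratio of 1 GB disk and 0.5 TB bandwidth per 64 MB of RAM, and a real node would plug in its own ratios.

```python
def resources_for(ram_mb: int,
                  disk_gb_per_unit: float = 1.0,
                  bw_tb_per_unit: float = 0.5,
                  unit_mb: int = 64) -> dict[str, float]:
    """Scale disk and bandwidth linearly with the number of 64 MB RAM units."""
    units = ram_mb / unit_mb
    return {
        "ram_mb": ram_mb,
        "disk_gb": units * disk_gb_per_unit,
        "bw_tb": units * bw_tb_per_unit,
    }


if __name__ == "__main__":
    # 128 MB is two units, so 2 GB disk and 1 TB bandwidth with the default ratios.
    print(resources_for(128))
```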

Thanks again to @Not_Oles for valiant testing. I'm probably going to stop adding panel features and add more hosts to the pool, then take up @Mason's suggestion of a new thread.

@tetech said: I would say changing the firewall and killing network access to the container was a dumb error! But easily fixed.

Not to be a picky jerk, but, really, testing a firewall seems like a smart idea! I always try a little testing to make sure my firewall seems to be doing what I think it's supposed to be doing. So I think this was a super excellent mistake to make! 🤩

Yeah, the basic principle is to share the free resource fairly but give users flexibility on how/where to allocate it.

Another thing in the "idea bank" is to allow users to choose their balloon memory. So you can over-allocate beyond your 128MB, and the OOM killer will penalize you more based on how much/how long you've been above the allocation. This becomes more like accounting for actual resource usage rather than provisioned resources.
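
One way to picture "how much/how long" is to add up the overage across periodic memory samples and turn that into a penalty. The sketch below is purely hypothetical: the sampling interval, the scale factor, and the mapping to an `oom_score_adj`-style value (Linux caps that setting at 1000) are all assumptions, and how such a value would be applied to a container is left open.

```python
def overage_mb_minutes(samples_mb: list[float], allocation_mb: float,
                       interval_min: float = 1.0) -> float:
    """Sum the memory used above the allocation, weighted by the sampling interval."""
    return sum(max(0.0, used - allocation_mb) for used in samples_mb) * interval_min


def penalty_score(overage: float, scale: float = 10.0) -> int:
    """Map accumulated overage to a 0..1000 penalty (hypothetical linear mapping)."""
    return min(1000, int(overage / scale))


if __name__ == "__main__":
    # One sample per minute against a 128 MB allocation.
    usage = [100, 140, 160, 120]
    over = overage_mb_minutes(usage, allocation_mb=128)
    print(over, penalty_score(over))
```

A container that briefly spikes a little accumulates a small score, while one that sits far above its allocation for a long time climbs toward the cap.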

Comments

So under 100MB of RAM used for two LXC containers, and that includes 20MB for Prometheus monitoring.

Seems like a lot has been accomplished in a short time! Congrats! 🎉

I hope everyone gets the servers they want!

It blew out to at least 30 hours. I had to learn the Prometheus API from scratch, and after that I refactored some things.

It's okay! Lots of folks like Prometheus so you learned something useful! 🆗

Free NAT KVM | Free NAT LXC

HS4LIFE (+ (* 3 4) (* 5 6))

Yeah, agreed.

May be worth opening a new thread with all this info, depending on how many users you want to bring on, since it's pretty well buried in this thread.

Good luck!! Panel looks great

Head Janitor @ LES • About • Rules • Support

Thanks for the encouragement. I don't consider being inconspicuous a bad thing at the moment.

That may change, but at first I'd like to do a sanity check of whether the containers work and I haven't done a major screw-up!

Sure, Debian, please. Will be fun! 🎉

I expect to be busy Monday, but other days next week should be fine.

Have a nice weekend!

Hi @tetech! You're welcome! It's fun to work with you! I appreciate your prompt replies and kind suggestions!

I didn't see any dumb errors! It all looks pretty good to me! ✅

Best wishes and kindest regards!

Thanks to @tetech for letting me help test! 💖

I kinda like the pool idea, though.
